The complementary characteristics of active and passive depth sensing techniques motivate the fusion of a LiDAR sensor and a stereo camera for improved depth perception. Instead of directly fusing the depths estimated by the LiDAR and stereo modalities, we take advantage of the stereo matching network and augment it with two techniques that exploit the LiDAR information: Input Fusion and Conditional Cost Volume Normalization (CCVNorm). The proposed framework is generic and closely integrated with the cost volume component that is commonly used in stereo matching neural networks. We experimentally verify the efficacy and robustness of our method on the KITTI Stereo and Depth Completion datasets, obtaining favorable performance against various fusion strategies. Moreover, we demonstrate that, with a hierarchical extension of CCVNorm, the proposed method adds only slight overhead to the stereo matching network in terms of computation time and model size.

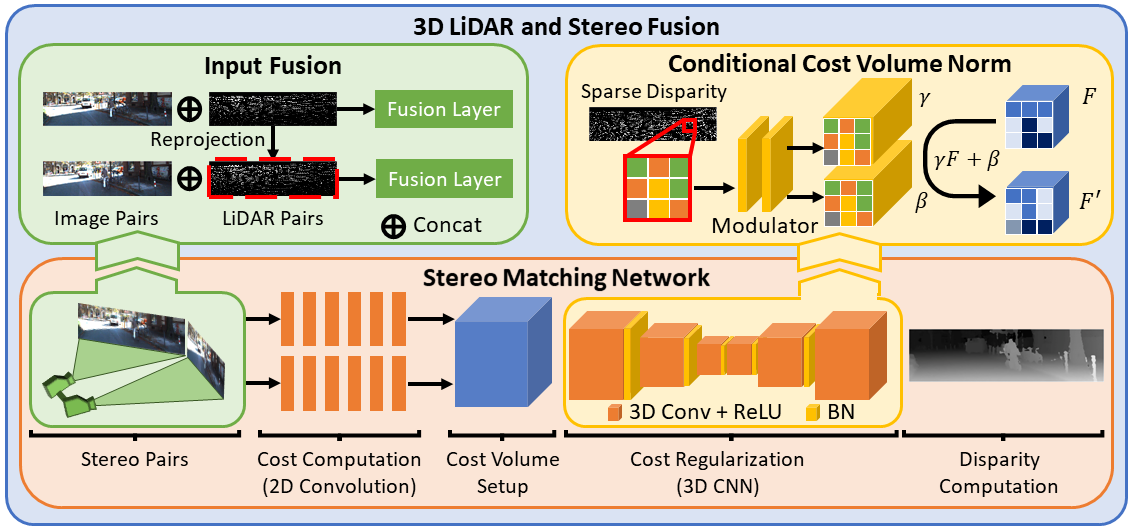

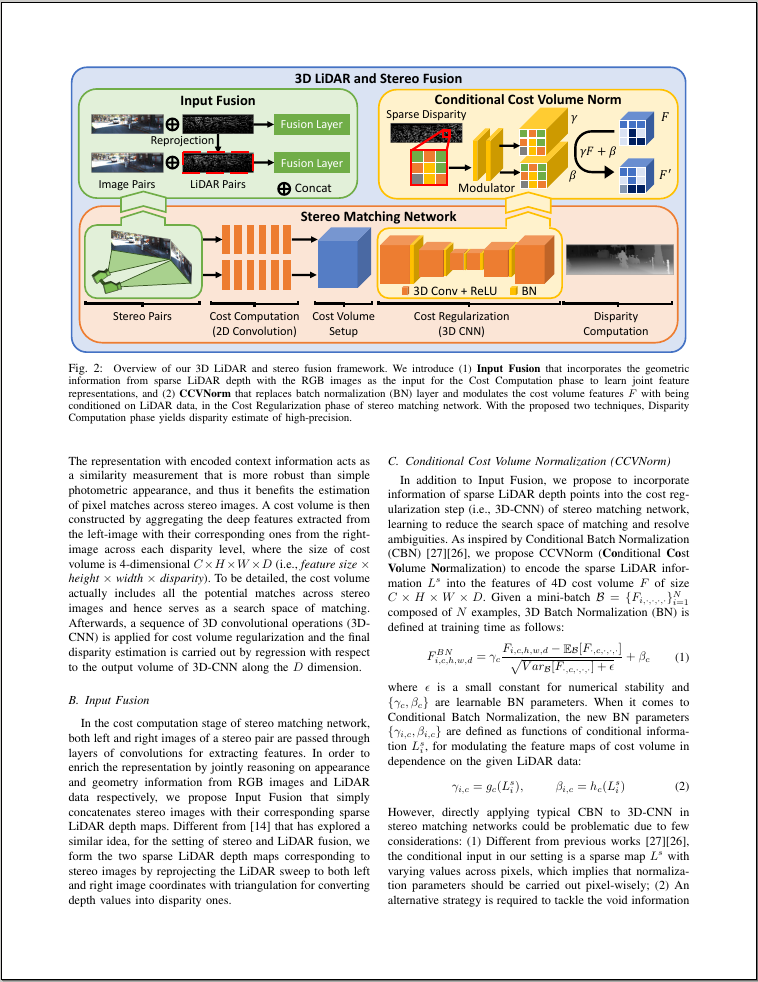

Overview of our 3D LiDAR and stereo fusion framework. We introduce (1) Input Fusion, which combines the geometric information from sparse LiDAR depth with the RGB images as the input to the Cost Computation phase in order to learn joint feature representations, and (2) CCVNorm, which replaces the batch normalization (BN) layer in the Cost Regularization phase of the stereo matching network and modulates the cost volume features F conditioned on the LiDAR data. With these two techniques, the Disparity Computation phase yields high-precision disparity estimates.
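As a rough illustration of Input Fusion, the sketch below stacks a sparse LiDAR disparity map onto an RGB image as a fourth channel. The function name `input_fusion`, the channel-wise concatenation, and the convention that pixels without a LiDAR return hold 0 are all assumptions made for this example, not details confirmed by the text above.

```python
import numpy as np

def input_fusion(rgb, sparse_disp):
    """Stack a sparse LiDAR disparity map onto an RGB image.

    rgb:         (H, W, 3) float array.
    sparse_disp: (H, W) float array; 0 marks pixels without a LiDAR
                 return (an assumed convention for this sketch).
    Returns an (H, W, 4) array that a feature extractor could consume.
    """
    if rgb.shape[:2] != sparse_disp.shape:
        raise ValueError("image and LiDAR map must share height and width")
    # Append the LiDAR map as one extra channel after R, G, B.
    extra = sparse_disp[..., None].astype(rgb.dtype)
    return np.concatenate([rgb, extra], axis=-1)

# Hypothetical toy input: a 4x6 image with a single LiDAR return.
rgb = np.ones((4, 6, 3), dtype=np.float32)
sparse_disp = np.zeros((4, 6), dtype=np.float32)
sparse_disp[1, 2] = 7.5
fused = input_fusion(rgb, sparse_disp)
```

In a stereo setting the same operation would be applied to each view with the LiDAR points reprojected into that view.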

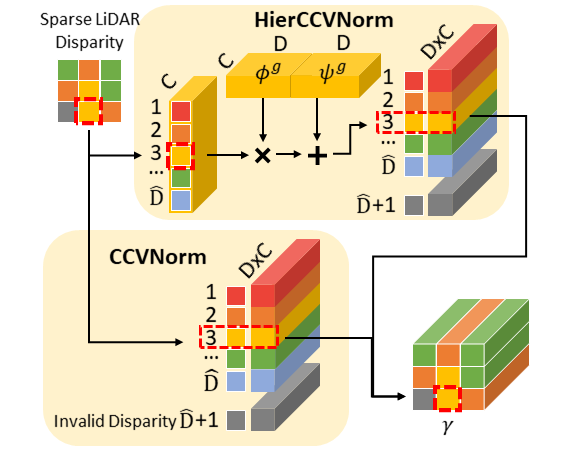

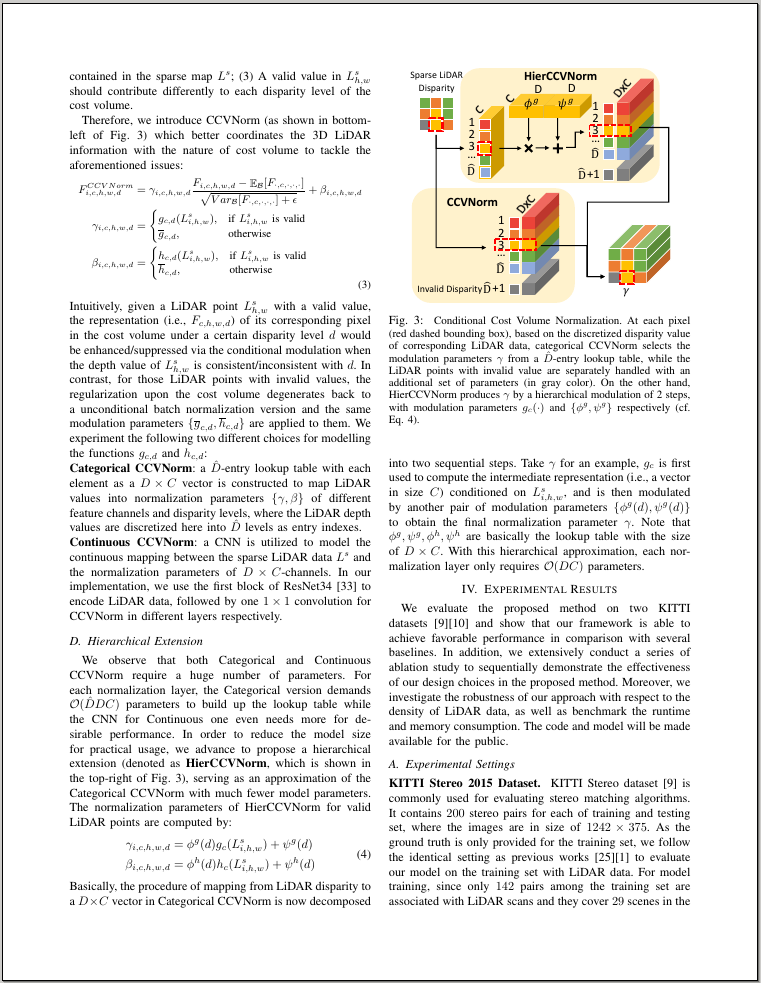

Conditional Cost Volume Normalization. At each pixel (red dashed bounding box), categorical CCVNorm selects the modulation parameters γ from a D̂-entry lookup table based on the discretized disparity value of the corresponding LiDAR data, while LiDAR points with invalid values are handled separately with an additional set of parameters (shown in gray). HierCCVNorm, on the other hand, produces γ through a two-step hierarchical modulation, with modulation parameters g_c(·) and {φ_g, ψ_g} respectively.
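The two variants described in this caption can be sketched in NumPy as follows. This is only an illustration under stated assumptions: the cost volume has shape (C, D, H, W), invalid LiDAR pixels hold 0 and use one extra table row, the shift β is modulated the same way as γ, and the hierarchical form γ = φ_g(d) · g_c(s) + ψ_g(d) is one plausible reading of the two-step modulation. All function names and parameter shapes are hypothetical.

```python
import numpy as np

def normalize(cost, eps=1e-5):
    # Per-channel normalization over (D, H, W); a single-sample
    # stand-in for the batch statistics a BN layer would use.
    mean = cost.mean(axis=(1, 2, 3), keepdims=True)
    var = cost.var(axis=(1, 2, 3), keepdims=True)
    return (cost - mean) / np.sqrt(var + eps)

def discretize(sparse_disp, max_disp, num_bins):
    # Valid disparities map to bins 0..num_bins-1; invalid pixels
    # (assumed to be stored as 0) map to the extra index num_bins.
    bins = (sparse_disp / max_disp * num_bins).astype(np.int64)
    bins = np.clip(bins, 0, num_bins - 1)
    return np.where(sparse_disp > 0, bins, num_bins)

def categorical_ccvnorm(cost, sparse_disp, gamma_table, beta_table, max_disp):
    # gamma_table, beta_table: (num_bins + 1, C, D); the last row is
    # the additional parameter set for invalid LiDAR pixels.
    num_bins = gamma_table.shape[0] - 1
    idx = discretize(sparse_disp, max_disp, num_bins)   # (H, W)
    gamma = gamma_table[idx].transpose(2, 3, 0, 1)      # (C, D, H, W)
    beta = beta_table[idx].transpose(2, 3, 0, 1)
    return normalize(cost) * gamma + beta

def hier_gamma(idx, g_c, phi_g, psi_g):
    # Step 1: g_c (num_bins + 1, C) picks a per-channel value from the
    # LiDAR bin. Step 2: phi_g and psi_g (D,) rescale and shift that
    # value for each disparity level of the cost volume.
    base = g_c[idx].transpose(2, 0, 1)[:, None, :, :]   # (C, 1, H, W)
    return phi_g[None, :, None, None] * base + psi_g[None, :, None, None]

# Toy example: C=2 channels, D=3 disparity levels, 2x2 pixels, 3 bins.
rng = np.random.default_rng(0)
cost = rng.normal(size=(2, 3, 2, 2))
sparse_disp = np.array([[0.0, 10.0], [40.0, 0.0]])
gamma_table = np.ones((4, 2, 3))
beta_table = np.zeros((4, 2, 3))
out = categorical_ccvnorm(cost, sparse_disp, gamma_table, beta_table, 48.0)
idx = discretize(sparse_disp, 48.0, 3)
g_c = rng.normal(size=(4, 2))
gamma_h = hier_gamma(idx, g_c, np.ones(3), np.zeros(3))
```

Under these assumed shapes, the categorical table stores (D̂ + 1) · C · D values per modulation parameter, whereas the hierarchical form stores (D̂ + 1) · C + 2D, which is consistent with the small model-size overhead claimed for the hierarchical extension.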

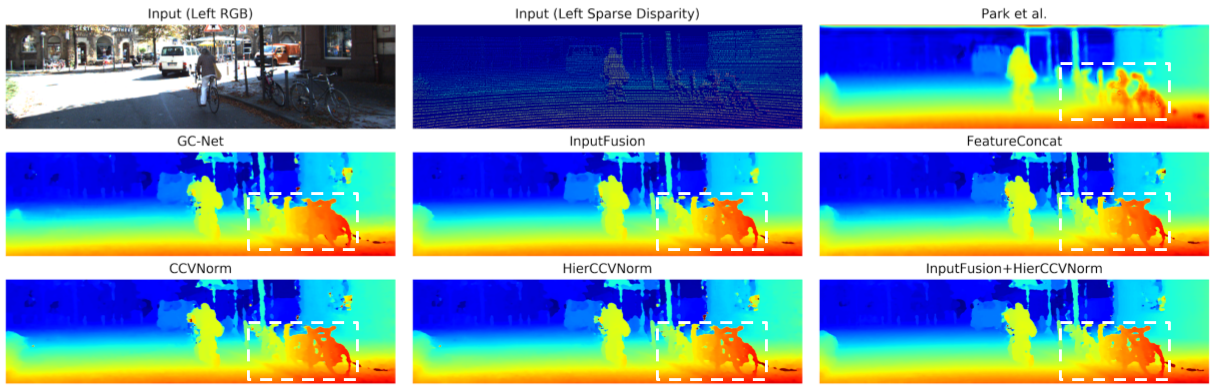

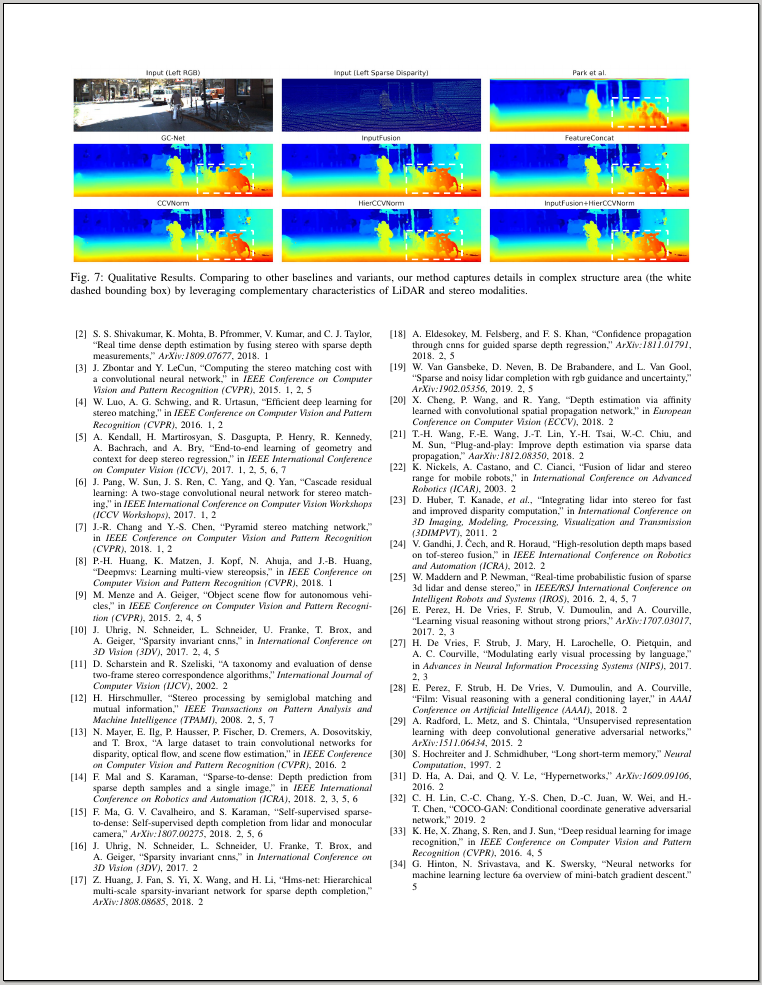

Qualitative Results. Compared with other baselines and variants, our method captures details in areas with complex structure (the white dashed bounding box) by leveraging the complementary characteristics of the LiDAR and stereo modalities.

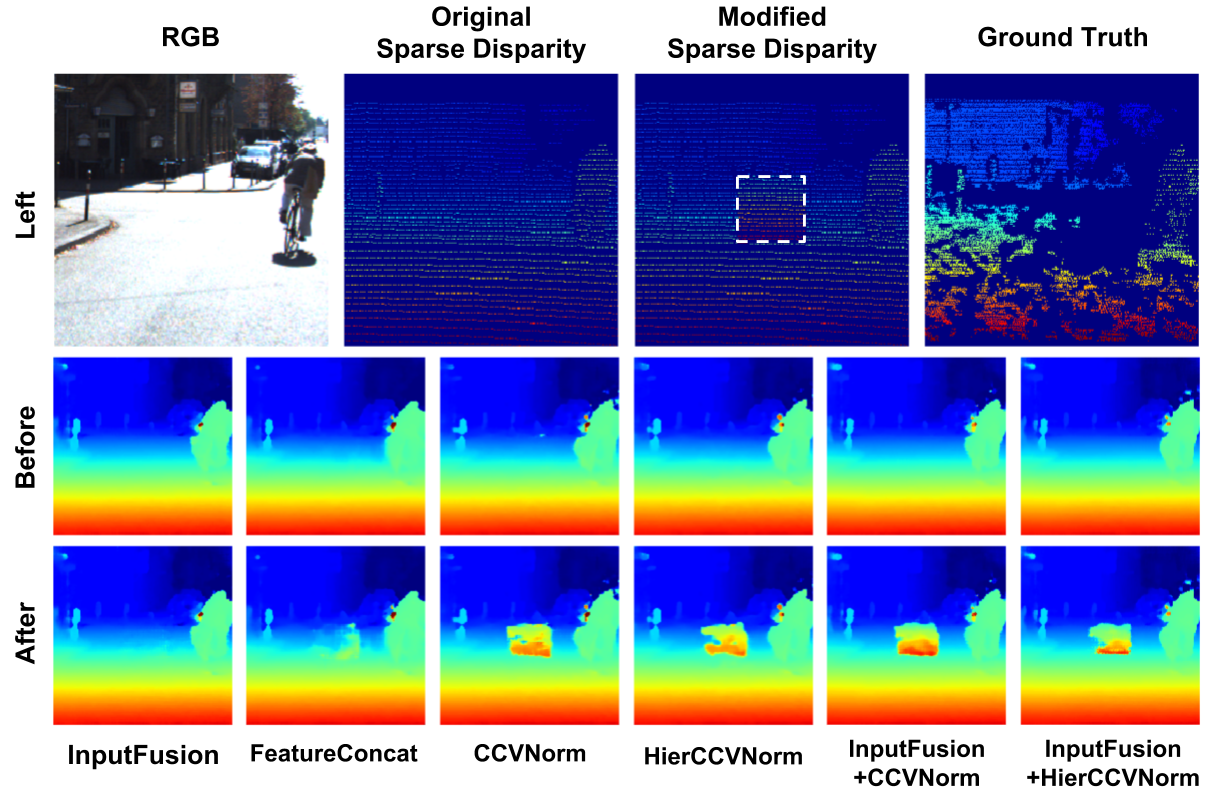

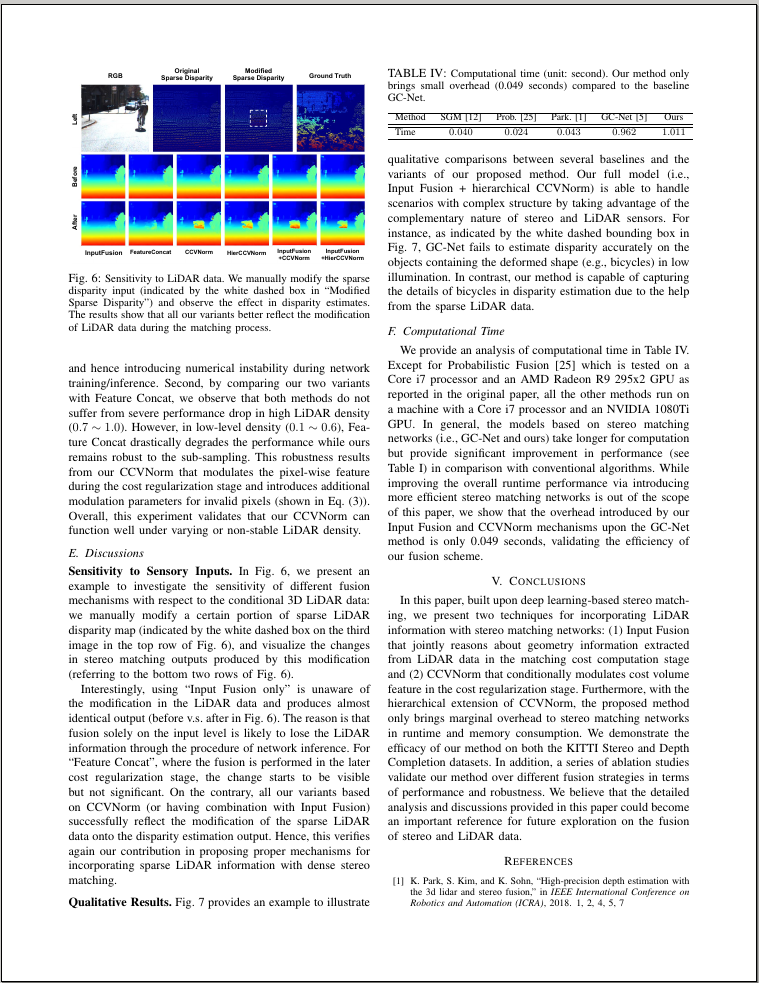

Sensitivity to LiDAR data. We manually modify the sparse disparity input (indicated by the white dashed box in “Modified Sparse Disparity”) and observe the effect on the disparity estimates. The results show that all our variants better reflect the modification of the LiDAR data during the matching process.

3D LiDAR and Stereo Fusion using Stereo Matching Network with Conditional Cost Volume Normalization.

Tsun-Hsuan Wang, Hou-Ning Hu, Chieh Hubert Lin, Yi-Hsuan Tsai, Wei-Chen Chiu, Min Sun

Paper (arXiv)

Source Code

@article{ccvnorm2019,
  title={3D LiDAR and Stereo Fusion using Stereo Matching Network with Conditional Cost Volume Normalization},
  author={Wang, Tsun-Hsuan and Hu, Hou-Ning and Lin, Chieh Hubert and Tsai, Yi-Hsuan and Chiu, Wei-Chen and Sun, Min},
  journal={arXiv preprint arXiv:xxxx.xxxxx},
  year={2019}
}