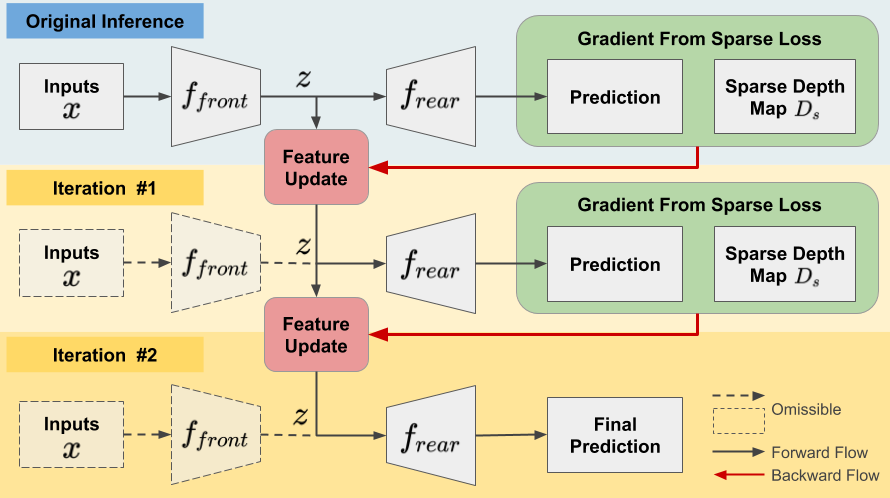

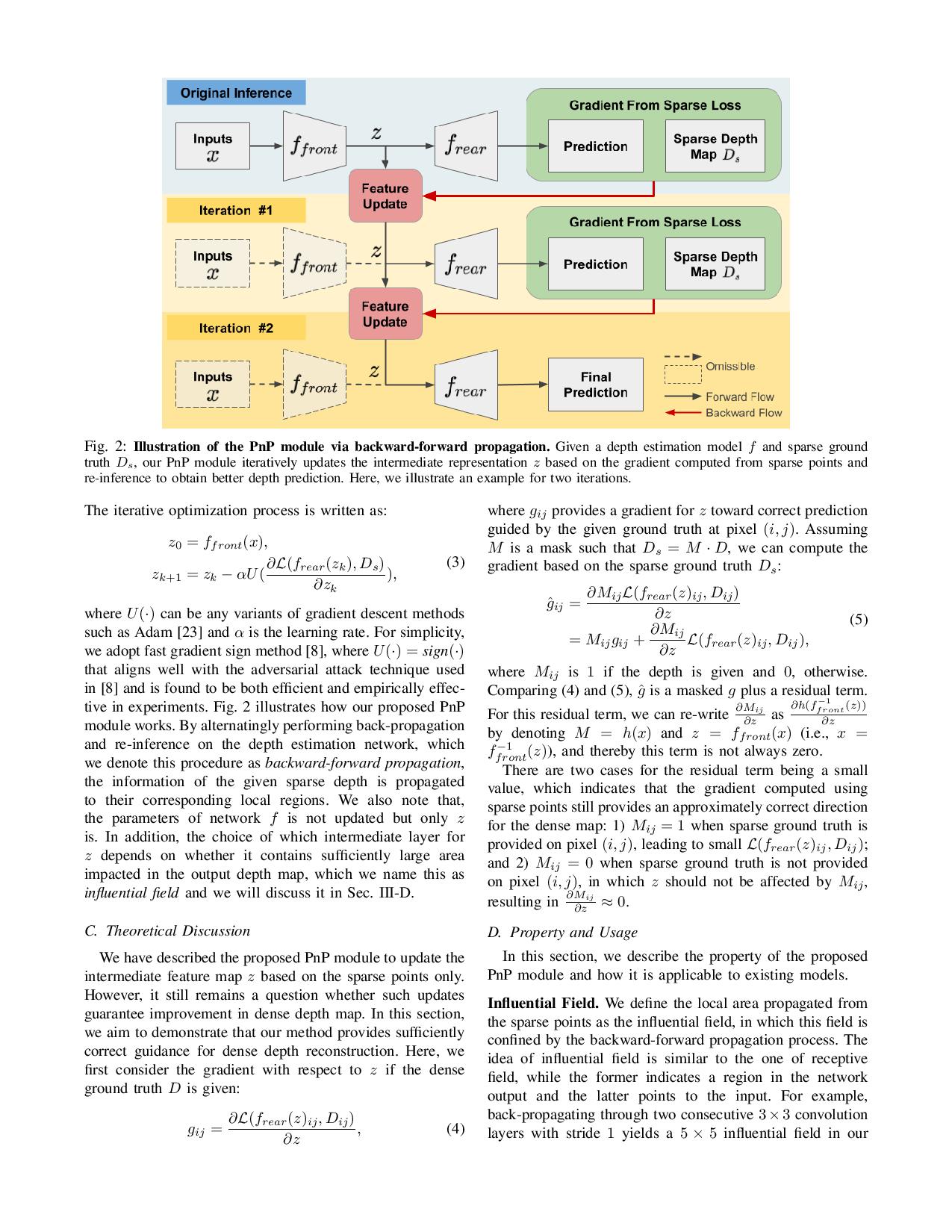

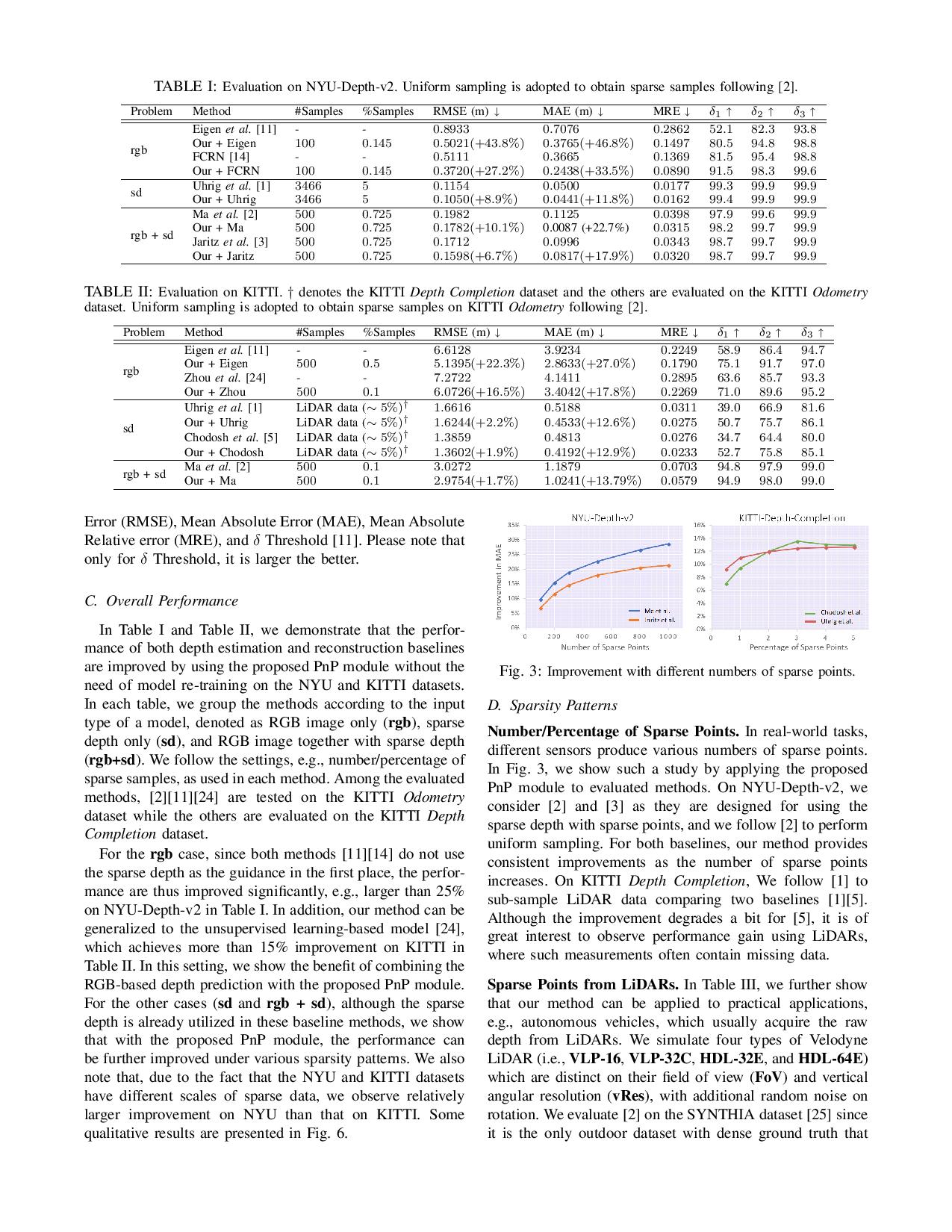

Plug-and-Play: Improve Depth Estimation via Sparse Data Propagation

Tsun-Hsuan Wang, Fu-En Wang, Juan-Ting Lin, Yi-Hsuan Tsai, Wei-Chen Chiu, Min Sun

Paper (arXiv)

Paper (arXiv)

Source Code

Source Code

@article{wang2018pnp,
  title={Plug-and-Play: Improve Depth Estimation via Sparse Data Propagation},
  author={Wang, Tsun-Hsuan and Wang, Fu-En and Lin, Juan-Ting and Tsai, Yi-Hsuan and Chiu, Wei-Chen and Sun, Min},
  journal={arXiv preprint arXiv:1812.08350},
  year={2018}
}